Amarisoft MME on Google Cloud

This tutorial shows how to set up the MME on a Google Cloud virtual machine and configure a Callbox (gNB) to connect to that MME (5G Core) on the cloud. It is a sample case in which the Amarisoft Core is installed on a cloud located far away from the RAN. This kind of setup introduces some factors that do not come into play in a regular setup where the RAN and Core are installed on the same PC. My motivation was to check whether all of these factors (challenges) are properly handled and overcome. The factors are as follows:

- Large delay between RAN and Core. When I tested this setup, the RAN (Amarisoft gNB/eNB) was at my home and the Core was installed on a cloud about 500 km away from my home. This introduces a much larger delay (both C-plane and U-plane) compared to a regular setup (i.e., RAN + Core in the same box).

- Network Address Translation. In this setup, the RAN PC was connected to the internet over WiFi at my home, so its traffic goes through IP translation between the local IP at my home and the global IP assigned to the WiFi router. The IP on the cloud was likewise configured to be translated between an internal IP and an external IP.

- Firewall Requirement on Cloud. The network setup on the cloud is not configured for this kind of application by default, so it needs to be reconfigured to pass all the protocols (e.g., SCTP) that are required for communication between RAN and Core.
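To get a feel for the first factor, here is a rough back-of-the-envelope sketch of the delay that distance alone adds. It assumes the signal travels through optical fiber at about 200,000 km/s (roughly two thirds of the speed of light in vacuum) along a straight path, which is my assumption and not a measurement from this setup.

```python
# Rough lower bound on the delay that distance alone adds between RAN and Core.
# Assumption: signals travel through optical fiber at about 200,000 km/s
# (roughly two thirds of the speed of light in vacuum) over a straight path.
FIBER_SPEED_KM_PER_S = 200_000.0


def one_way_delay_ms(distance_km: float) -> float:
    """Propagation delay in milliseconds for the given fiber distance."""
    return distance_km / FIBER_SPEED_KM_PER_S * 1000.0


def round_trip_ms(distance_km: float) -> float:
    """Round-trip propagation delay in milliseconds."""
    return 2.0 * one_way_delay_ms(distance_km)


if __name__ == "__main__":
    # 500 km is the approximate RAN-to-cloud distance quoted in this tutorial.
    print(f"one-way:    {one_way_delay_ms(500):.1f} ms")
    print(f"round trip: {round_trip_ms(500):.1f} ms")
```

The delay actually observed will be higher than this floor, since real routes are not straight and the WiFi hop, routers and NAT devices each add their own latency.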

Introduction

Deploying mobile network core components, such as the Mobility Management Entity (MME) and Amarisoft 5G Core, on cloud infrastructure introduces new paradigms for wireless network architecture, enabling flexibility, scalability, and remote management. This tutorial focuses on the technical process of setting up the MME on a Google Cloud Virtual Machine (VM) and configuring a remote Radio Access Network (RAN) component, specifically the Callbox (gNB) by Amarisoft, to interface with the cloud-based core.

Traditionally, RAN and core network elements are physically co-located or installed on the same local hardware, minimizing latency and network complexity. However, cloud deployment scenarios, where the core is hosted remotely (in this case, approximately 500 km from the RAN), present unique challenges such as increased control and user plane latency, Network Address Translation (NAT) traversal, and specialized firewall configurations to allow protocols like SCTP. These factors are not typically encountered in local deployments but are critical in real-world, distributed, cloud-native mobile networks.

Amarisoft’s licensing model, which requires a license server rather than fixed licenses for cloud and virtualized installations, further emphasizes the shift towards dynamic, scalable networking. Understanding and addressing these architectural differences is essential for network engineers and researchers exploring the deployment of 5G core networks in the cloud, as it provides practical insights into the impact of network topology, latency, IP management, and security requirements on end-to-end mobile network performance.

Context and Background

- Technology Overview: The tutorial centers on deploying Amarisoft 5G Core (including MME) on a Google Cloud VM and connecting a remote Amarisoft Callbox (gNB/eNB), forming a distributed 5G/LTE network architecture.

- Architectural Considerations: The RAN (gNB/eNB) is geographically separated from the core, introducing WAN-induced latency, varying IP addressing schemes, and complex firewall/NAT traversal requirements.

- Licensing Implications: Amarisoft products require license server authentication for cloud or virtual machine installations; fixed licenses are not supported in these environments.

Relevance and Importance

- Demonstrates real-world deployment scenarios: Simulates practical cases where RAN and core functions are separated by significant physical distance, reflecting trends in network disaggregation and cloudification.

- Addresses operational challenges: Highlights how factors like delay, NAT, and firewall traversal can impact network performance and connectivity in cloud-based mobile networks.

- Proof of concept for cloud-native mobile architectures: Provides hands-on experience and validation of cloud deployment feasibility for mobile core networks.

Learning Outcomes

- Understand cloud-based mobile core deployment: Learn how to install and configure Amarisoft MME/5G Core on a Google Cloud VM.

- Connect a remote gNB/eNB to the cloud core: Master the configuration of Callbox (gNB) to interface with a geographically distant core.

- Troubleshoot real-world network challenges: Gain practical skills in resolving issues related to latency, NAT, and firewall traversal in distributed telecom networks.

- Evaluate the impact of network topology: Assess how WAN connectivity influences control and user plane performance in 5G/LTE networks.

Prerequisite Knowledge and Skills

- Familiarity with 4G/5G core network concepts (e.g., MME, gNB/eNB, SCTP, S1AP, NGAP protocols).

- Basic experience with Google Cloud Platform (VM provisioning, firewall configuration, networking).

- Understanding of IP networking (NAT, public/private addressing, routing).

- General Linux system administration (installation, service management, network configuration).

- Access to Amarisoft software and valid license server credentials for cloud/virtualized deployments.

Summary of the Tutorial

This tutorial outlines the end-to-end procedure for configuring and testing connectivity between an Amarisoft MME running on a Google Cloud virtual machine and a remote gNB (callbox), with a focus on both signaling and user traffic across networks with Network Address Translation (NAT).

Test Setup and Environment Preparation:

- Provision a Google Cloud VM and set up a Linux environment (preferably Ubuntu Server 20.04 for compatibility with Amarisoft MME and Open5GS).

- Install Amarisoft MME on the cloud VM, ensuring proper license activation and confirming service status using service lte restart and process monitoring tools.

- Prepare a remote callbox (gNB) connected to a separate local network.

- Ensure both systems (Cloud and Callbox) are reachable via their respective local and public IPs.

Key Network Configuration Parameters:

- Configure the following in the respective configuration files (mme-ims-cloud.cfg for MME and gnb-sa-mme-cloud.cfg for gNB):

- gtp_addr: Local GTP address of each node.

- gtp_ext_addr: Public/external IP address of each node (critical for traversing NAT).

- Modify only the gtp_addr as needed, keeping other parameters at default unless otherwise required.

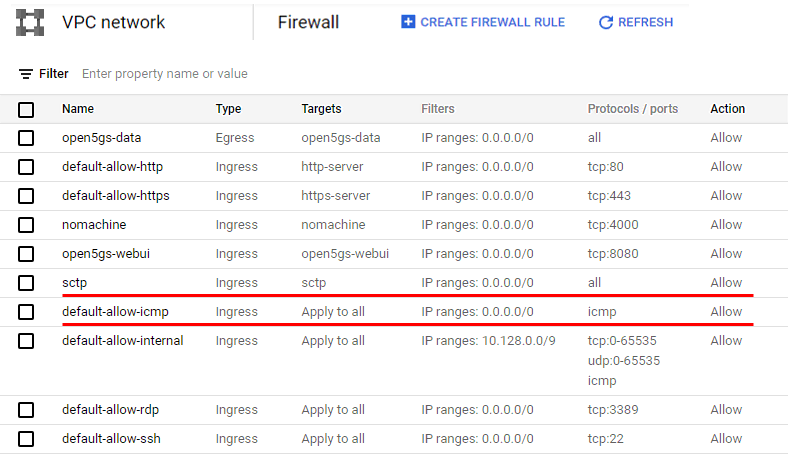

Firewall and Routing Configuration:

- Update Google Cloud firewall rules to permit signaling traffic between the callbox and the MME VM (add specific rules as necessary in addition to defaults).

- Review and record the routing table after MME installation for troubleshooting connectivity issues.

Service Launch and Connectivity Test Procedure:

- Start the MME service on the cloud VM, ensuring it is running without errors.

- Start the LTE (gNB/eNB) service on the callbox after confirming the MME is operational.

- Verify cell configuration on the callbox; detailed cell settings are not crucial for this test as long as they permit UE connectivity.

- Power on a UE and observe its connection status to confirm successful registration with the MME on the cloud.

Log Analysis and Verification:

- Check NGAP connection logs on the callbox to ensure that the connection is established with the external IP of the cloud VM.

- Confirm that all NGAP signaling is routed through the correct external/public IP addresses.

User Traffic Configuration and Testing:

- To enable user-plane traffic across NAT, set gtp_ext_addr in both mme.cfg (cloud) and enb.cfg (callbox) to the respective public IPs of the cloud VM and callbox network.

- Obtain public IP addresses via cloud VM info and online IP lookup services for the callbox network.

- After configuration, initiate IP traffic (e.g., ping, browsing, streaming) from UE to verify data transmission over GTPU between MME and gNB/eNB.

Conclusion: This test procedure ensures robust connectivity for both signaling and user traffic between a cloud-hosted core network and a remote radio node, with special attention to the challenges posed by NAT and firewall configurations. Proper adjustment of gtp_ext_addr parameters is essential for successful user-plane data flow.

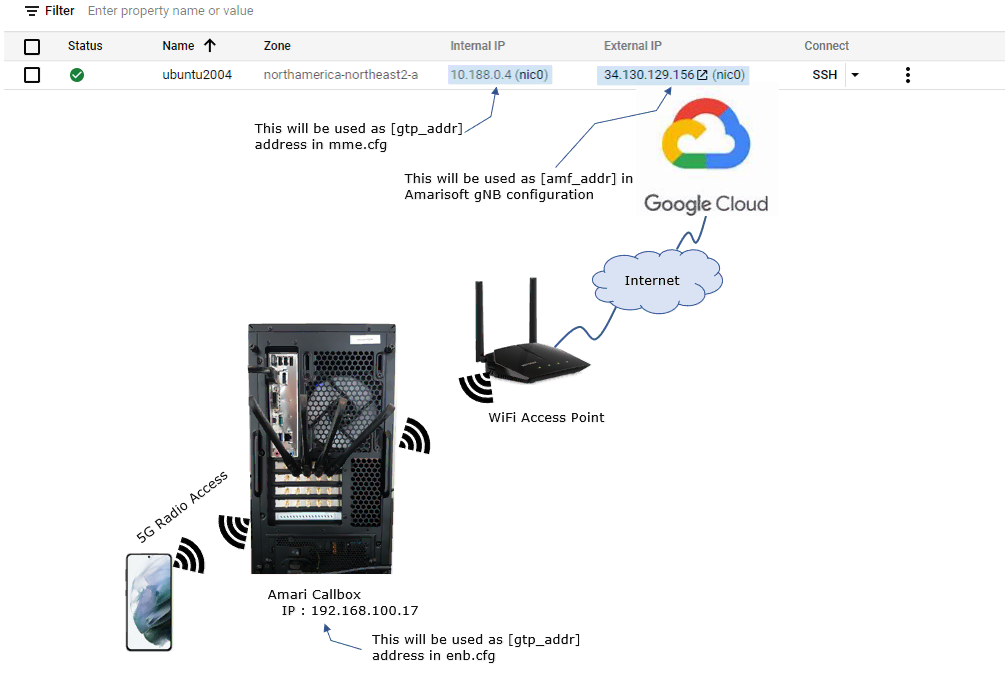

Test Setup

The test setup for this tutorial is as shown below. I assume that you know how to set up an account and a virtual machine on Google Cloud and how to install the MME on a Linux PC.

Key Configuration Parameters

The following are the important configuration parameters for this tutorial. Descriptions of each item are available in the Amarisoft documentation.

- gtp_addr

- gtp_ext_addr (mme)

- gtp_ext_addr (enb)

Configuration

The difficult part of the configuration is that the core (MME) and the gNB are located outside of each other's network. On top of that, the virtual machine on the cloud and the gNB (callbox) are each connected to their own local network (i.e., neither has a global IP). So you need to work out several things to get those local IPs to talk to each other.

Cloud

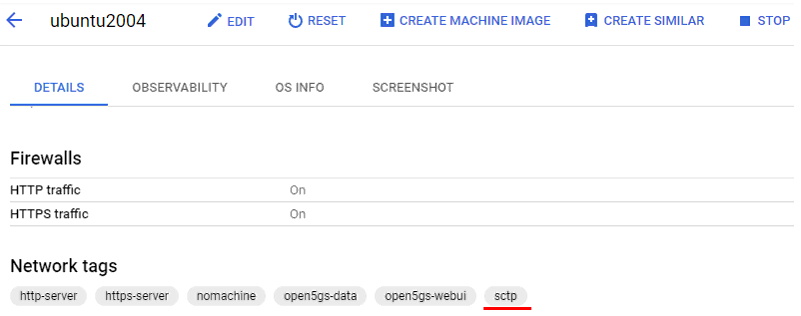

The first thing to make sure of is that all the necessary firewall settings are configured to allow the signaling traffic to pass between the callbox at home/office and the core network on the cloud.

The following are all the firewall settings on my cloud setup. For this tutorial, you should add at least the two items underlined in red, as shown below, in addition to the default firewall settings.

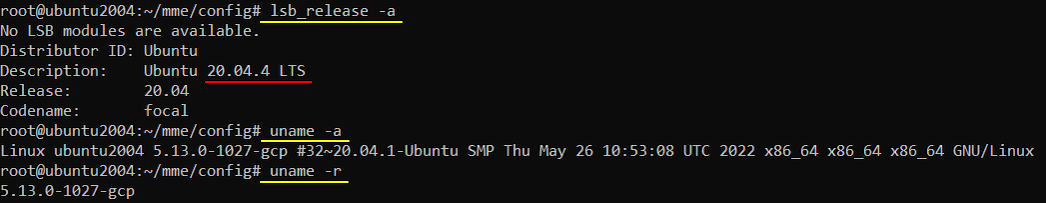
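The two rules themselves are only visible in the screenshot. As a rough sketch of what such a rule could look like when created from the command line, the snippet below assembles a `gcloud compute firewall-rules create` command. It assumes the gNB reaches the core over NGAP on SCTP port 38412 and GTP-U on UDP port 2152 (the standard port numbers, not values read from the screenshot). The rule name allow-ran-to-core is hypothetical, and aaa.bbb.ccc.ddd stands for the public IP of the network where the callbox is located.

```python
# Sketch: build a `gcloud compute firewall-rules create` command for the
# ingress traffic a remote gNB sends to the core on the cloud.
# Assumptions (not taken from the tutorial's screenshot): NGAP uses SCTP
# port 38412 and GTP-U uses UDP port 2152. The rule name and the source
# range are placeholders.
from typing import List


def firewall_rule_command(name: str, allow: List[str], source_range: str) -> List[str]:
    """Return the gcloud command as an argument list (nothing is executed)."""
    return [
        "gcloud", "compute", "firewall-rules", "create", name,
        "--direction=INGRESS",
        "--allow=" + ",".join(allow),
        "--source-ranges=" + source_range,
    ]


if __name__ == "__main__":
    cmd = firewall_rule_command(
        "allow-ran-to-core",            # hypothetical rule name
        ["sctp:38412", "udp:2152"],     # NGAP signaling and GTP-U user traffic
        "aaa.bbb.ccc.ddd/32",           # public IP of the callbox network
    )
    print(" ".join(cmd))
```

If you connect an eNB (LTE) rather than a gNB, the signaling protocol is S1AP, which uses SCTP port 36412, so the allow list would need that port instead.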

The OS-related information that I used in this tutorial is as shown below. For Amarisoft MME alone, you may use various other Linux distributions, but I am using the specific distribution shown below so that I can use it not only for Amarisoft MME but also for the open source core network called Open5GS. (As of Jun 2022, it seems Open5GS works only on Ubuntu Server 20.04. I tried Ubuntu Server 22.04 but the installation didn't go through. Ubuntu Desktop didn't work for Open5GS either.)

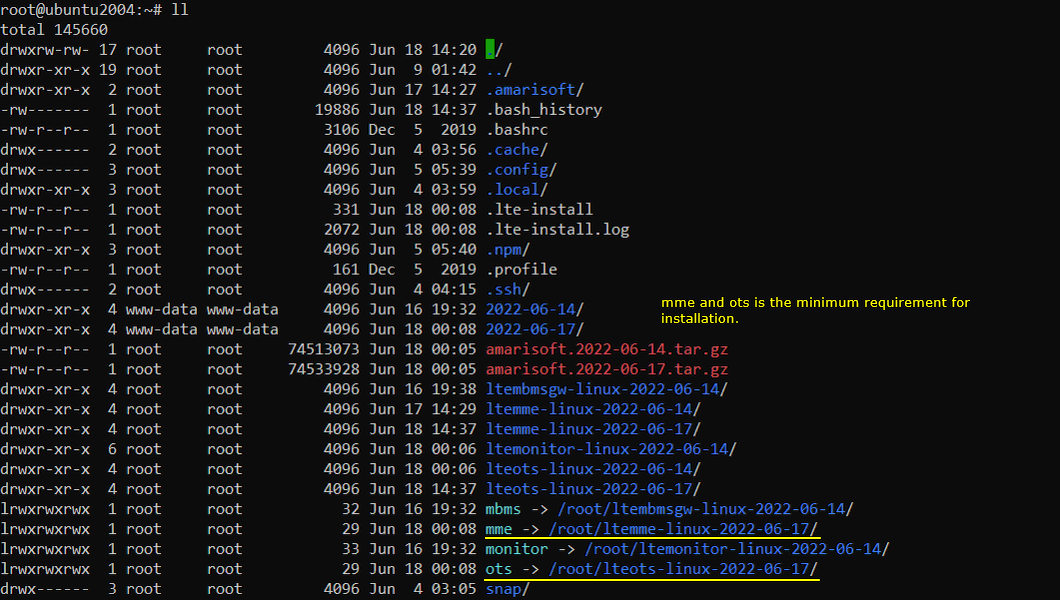

Install mme only as shown below. (NOTE : monitor and mbms are not required)

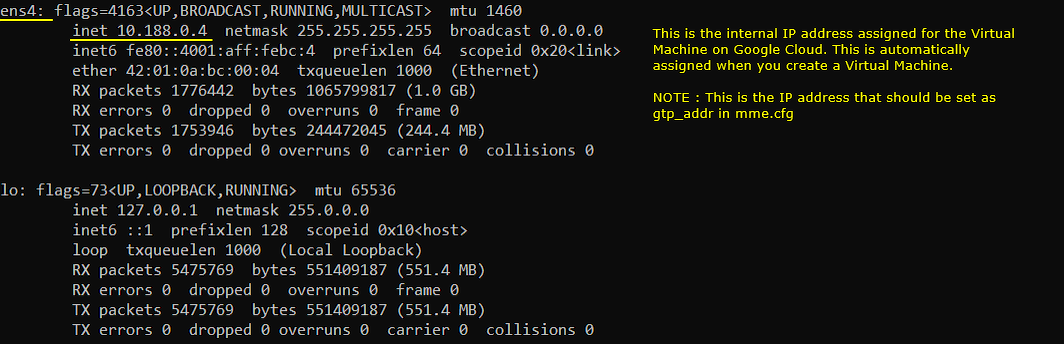

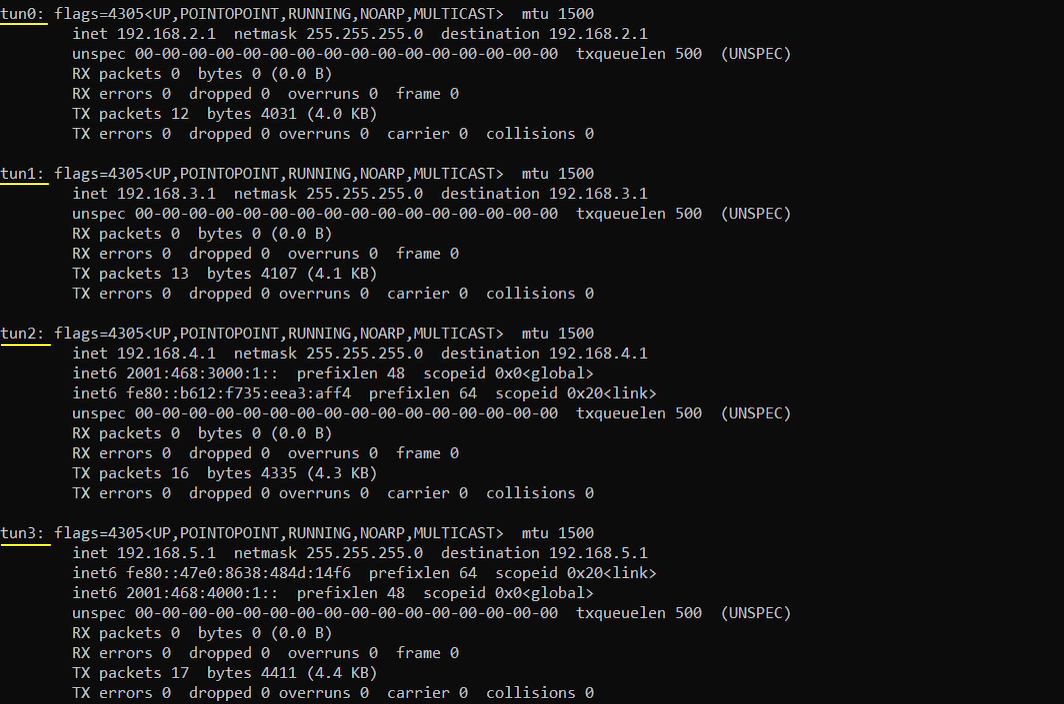

If you installed Amarisoft MME successfully on the cloud and the license is set up properly, you will see the network configuration as shown below.

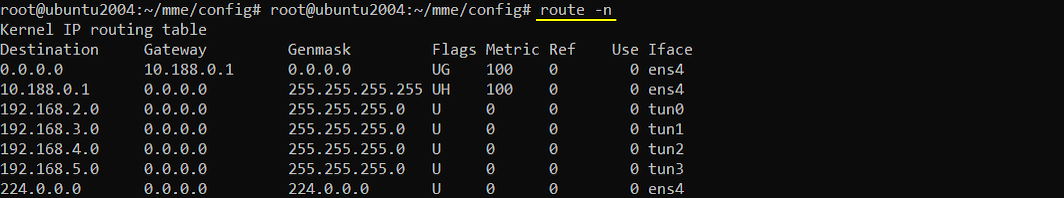

Routing table after the installation of Amarisoft MME would be as follows.

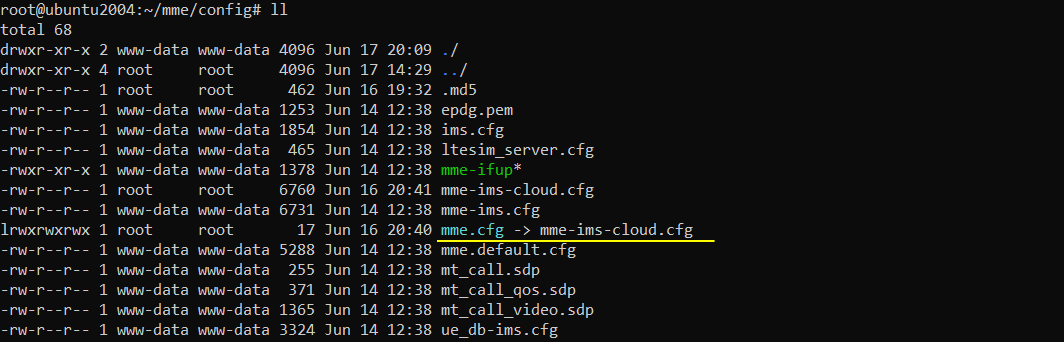

I used mme-ims-cloud.cfg which is copied and modified from mme-ims.cfg

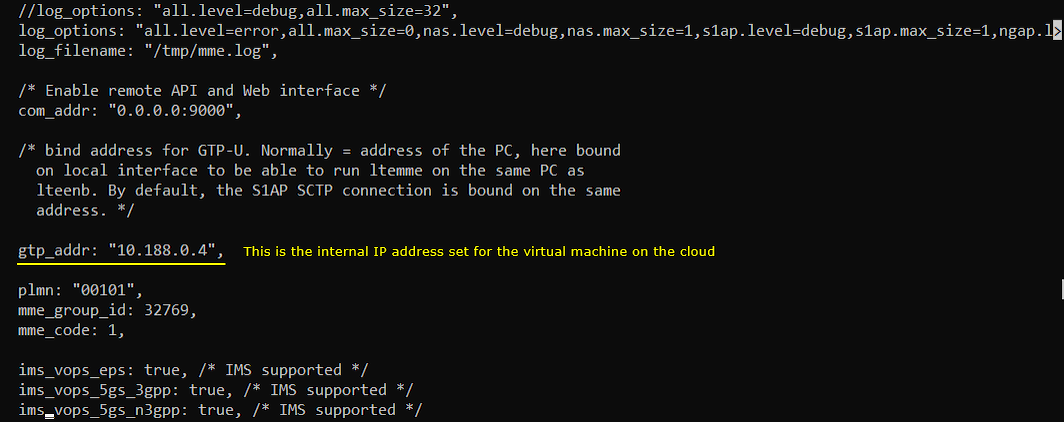

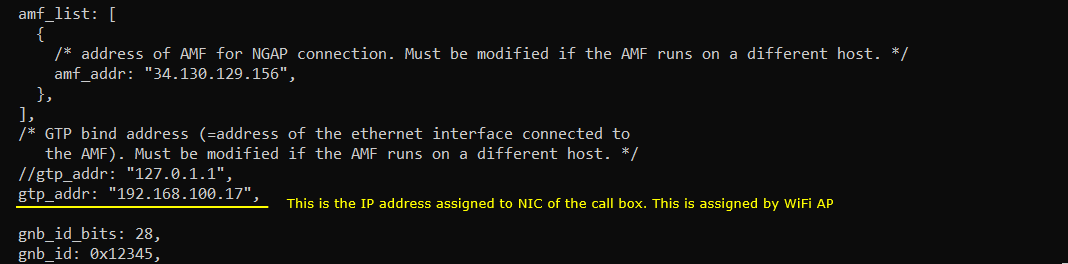

The following is the configuration in mme-ims-cloud.cfg. I changed only gtp_addr and kept everything else at its default.

Callbox

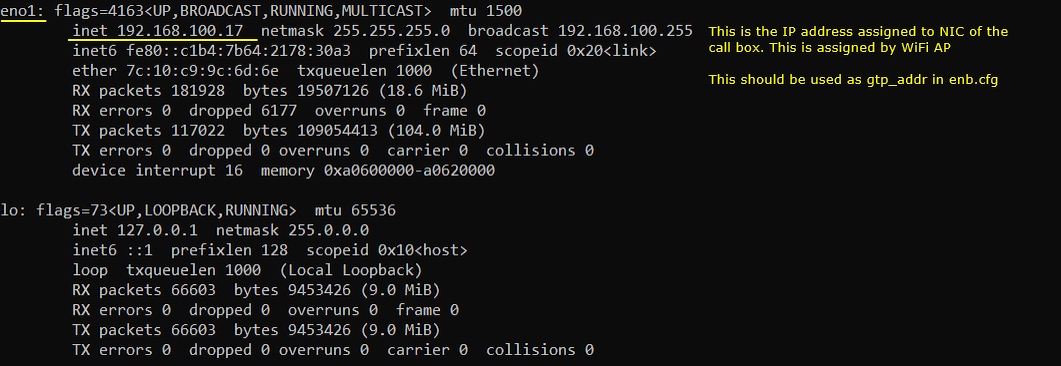

The network configuration of the callbox should be as follows

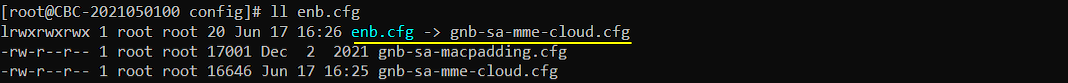

I used the gnb-sa-mme-cloud.cfg which is copied and modified from gnb-sa.cfg

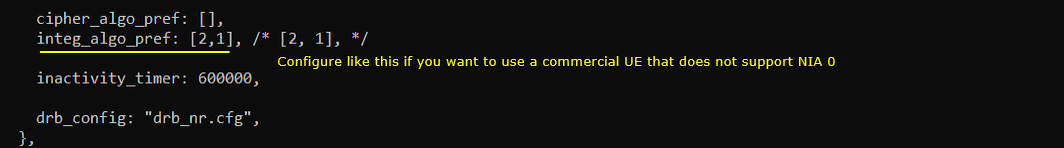

The configuration in gnb-sa-mme-cloud.cfg is as follows.

Run

Since the gNB and the MME are located in different places, you cannot run both of them using a single lte service. You need to launch the MME and the gNB/eNB separately on each system (i.e., Cloud PC and Callbox PC). I suggest running the MME first and then running the service on the callbox, since the MME should be ready before the gNB/eNB launches.

mme on Cloud

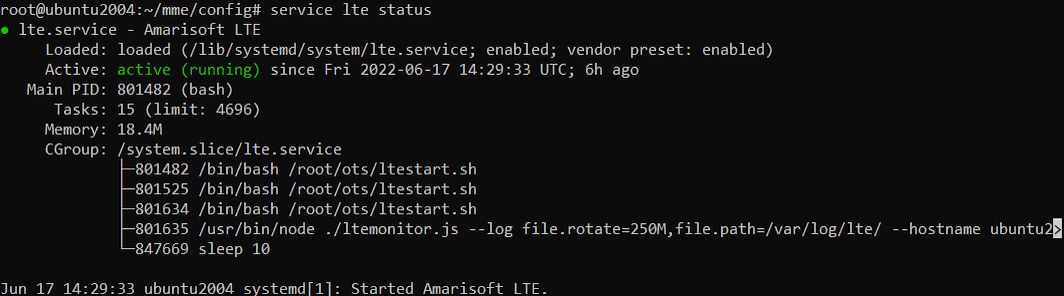

Make sure that the MME is running as shown below. If you don't see it running, start it using the command 'service lte restart'.

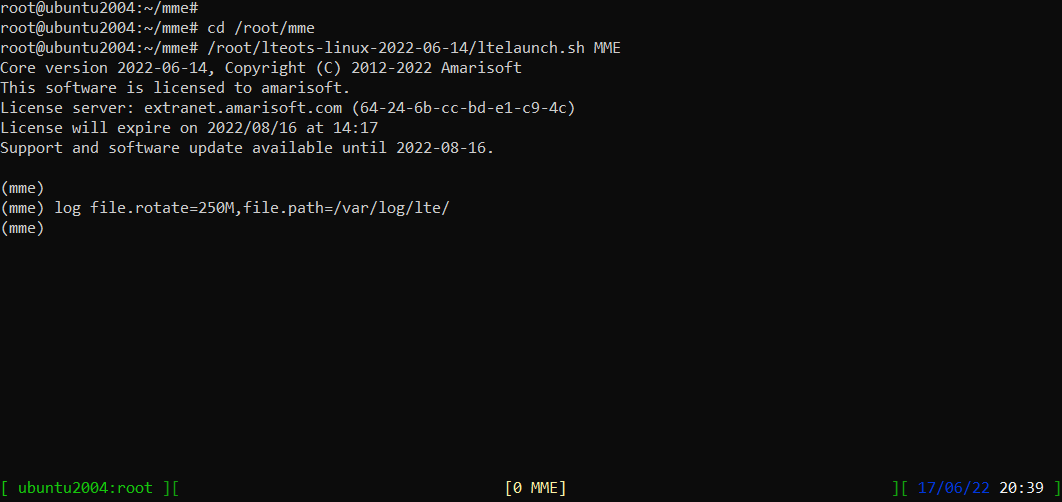

Run screen and confirm that the MME is running without any error.

Callbox

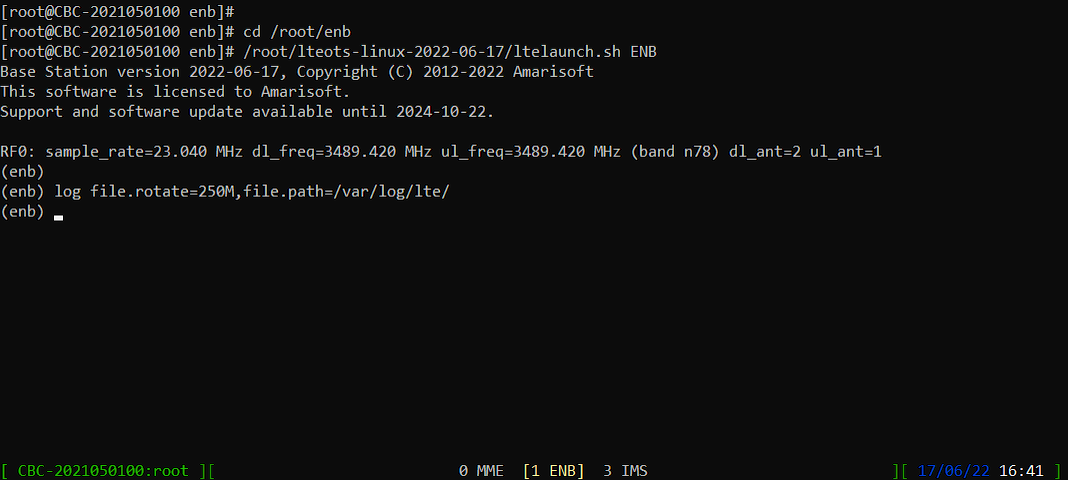

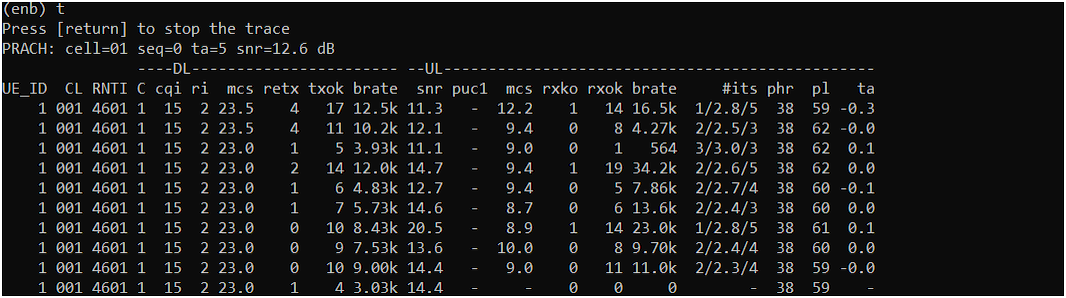

Run the lte service on the callbox and go to the [ENB] screen as follows.

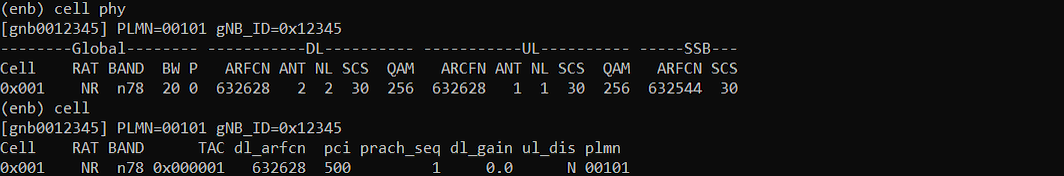

Check the cell configuration and see if it is configured as you want. For this tutorial, the cell configuration is not so important as long as it works with your UE, since the main purpose of this tutorial is to test core network connectivity.

Turn on the UE and check that it gets connected as shown below.

Log Analysis

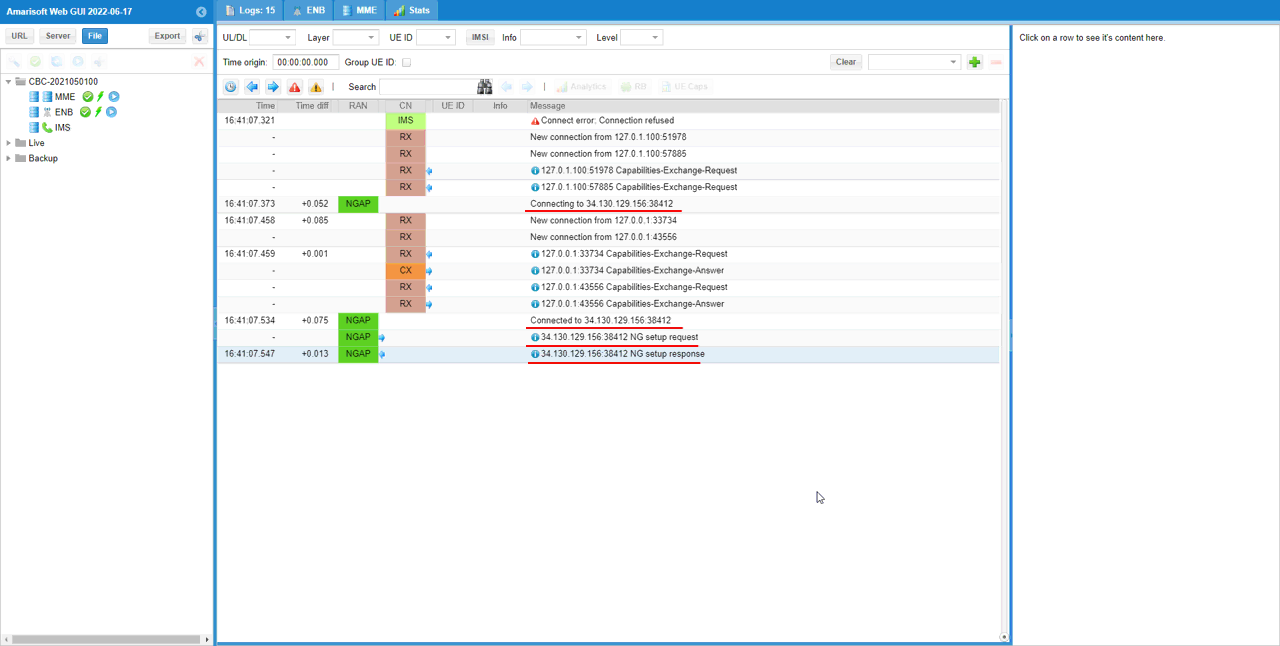

As soon as you start the lte service on the callbox, and if the gNB is successfully connected to the MME on the cloud, you should see the NGAP connection as shown below. (You should see that NGAP is connected to the external IP of the virtual machine on the cloud.)

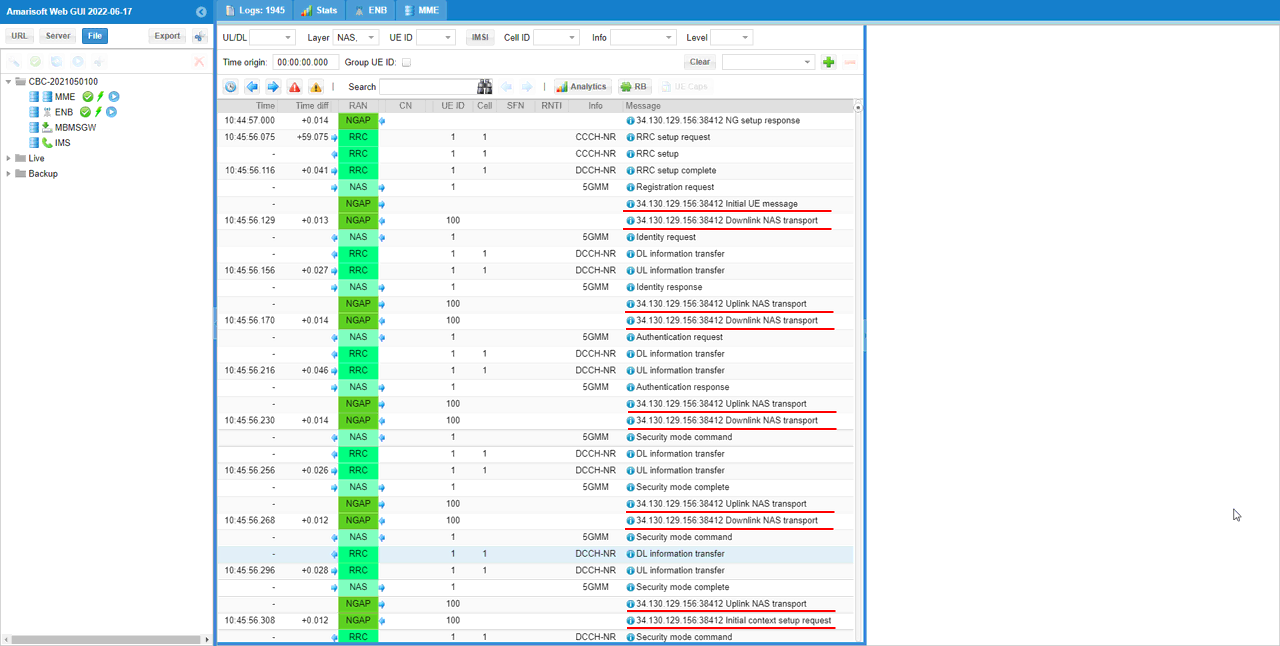

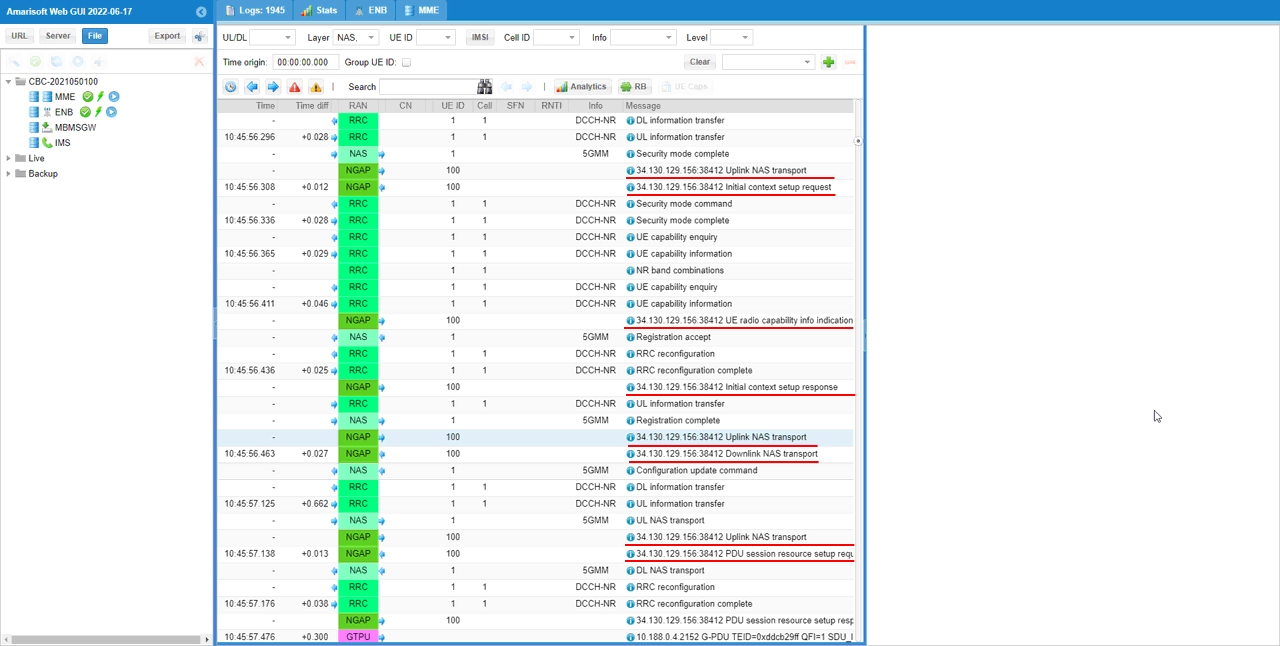

Once the initial registration of the UE is complete, you should see that all the NGAP signaling is done with the external IP of the virtual machine on Cloud.

Tips

The test setup described above works for all the signaling messages without any problem, but user traffic does not go through, due to Network Address Translation on both the cloud network and the network where the callbox is located. Luckily, the Amarisoft gNB and Core network provide a configuration to work around this issue, as shown below.

Setting up for User Traffic

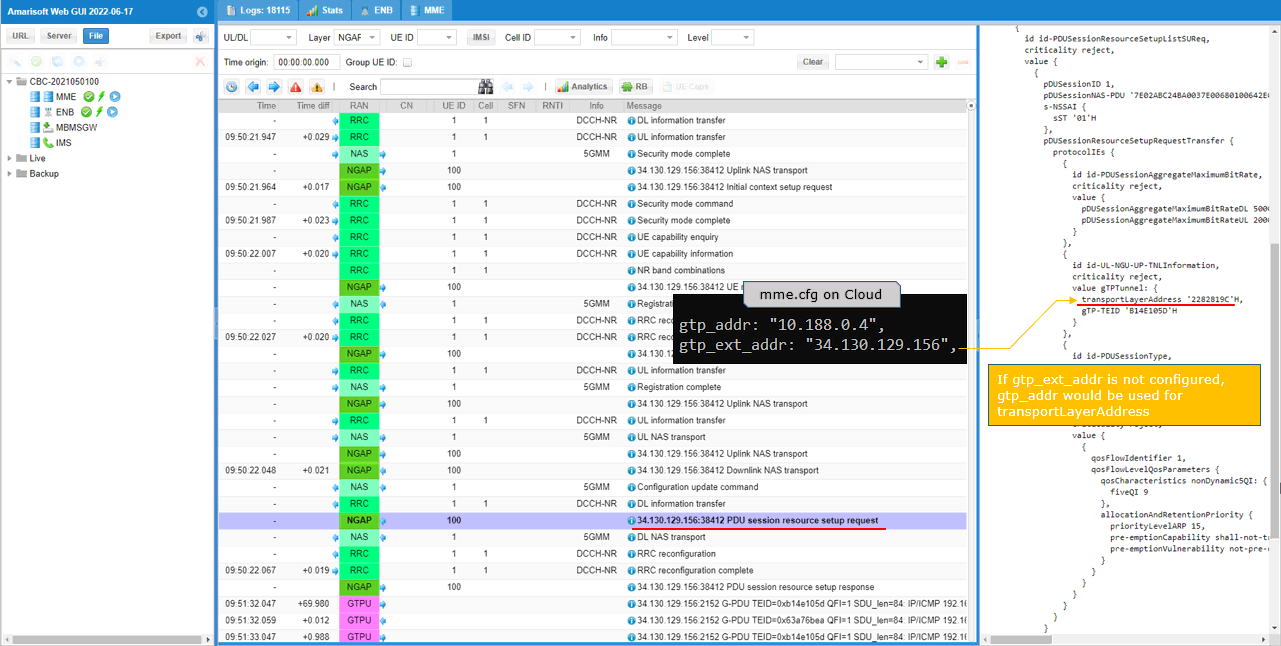

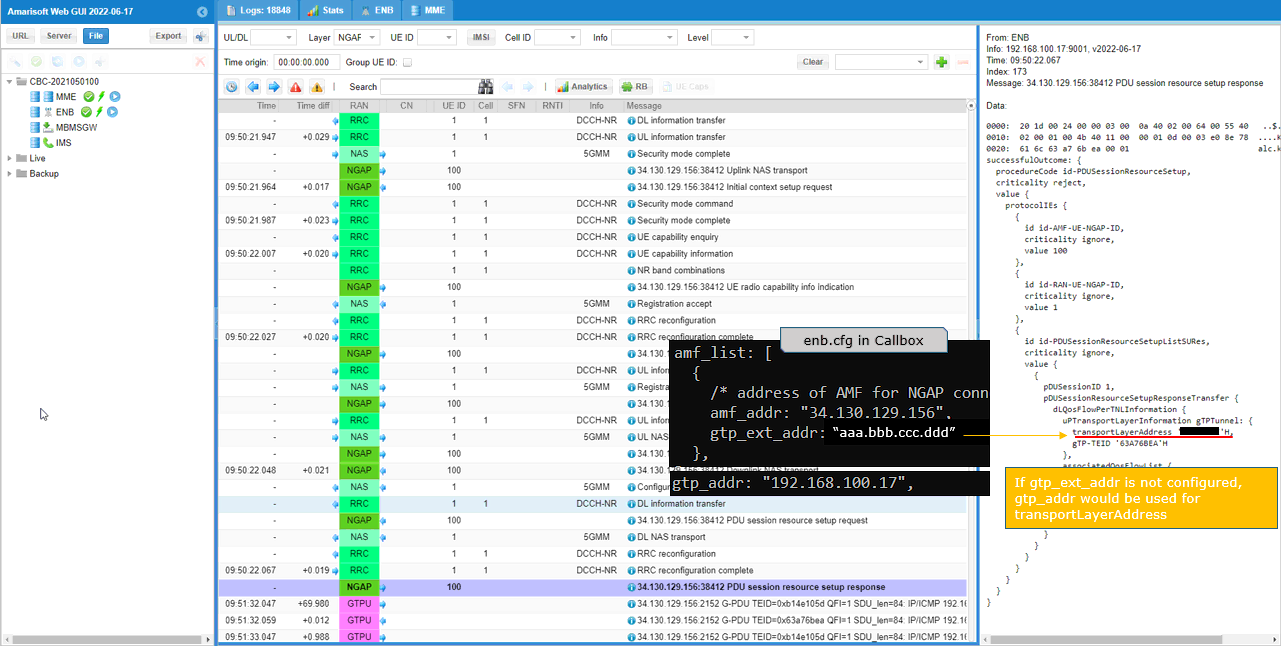

The key point of this configuration is to use the gtp_ext_addr parameter to specify the external (public) IP. The external (public) IP address for Google Cloud is displayed in the virtual machine information. The public IP of your own network (aaa.bbb.ccc.ddd) can be obtained by searching 'my ip address' in Google Search.
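A common slip is to put a local address into gtp_ext_addr by mistake. As a minimal sketch of a sanity check, the snippet below uses Python's standard ipaddress module to tell whether a candidate value is a globally routable address. The helper name is_public_address and the sample addresses are my own illustrations, not values from this setup.

```python
# Sketch: check that a value intended for gtp_ext_addr is a public
# (globally routable) address rather than a local one.
import ipaddress


def is_public_address(addr: str) -> bool:
    """True if addr is globally routable (not private, loopback, link-local, ...)."""
    return ipaddress.ip_address(addr).is_global


if __name__ == "__main__":
    # Example values only: a typical home LAN address, a typical
    # cloud-internal address, and a well-known public address.
    for candidate in ("192.168.1.10", "10.128.0.2", "8.8.8.8"):
        print(candidate, is_public_address(candidate))
```

Only an address for which this returns True is a sensible candidate for gtp_ext_addr on either side.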

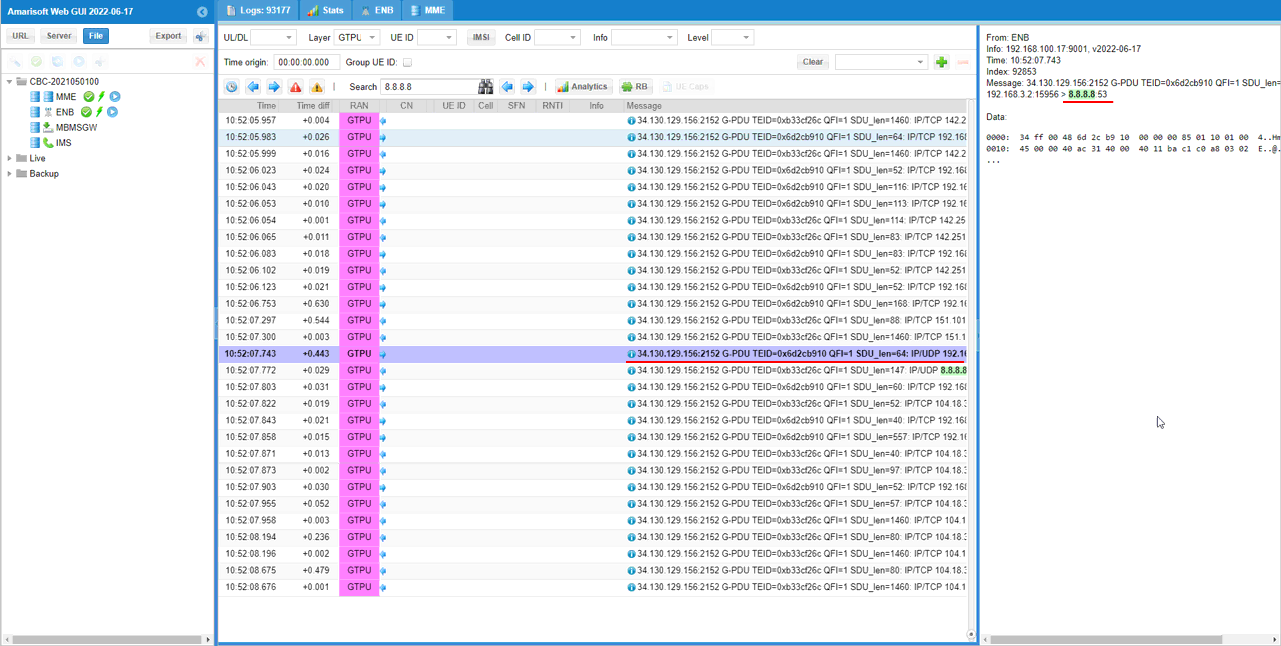

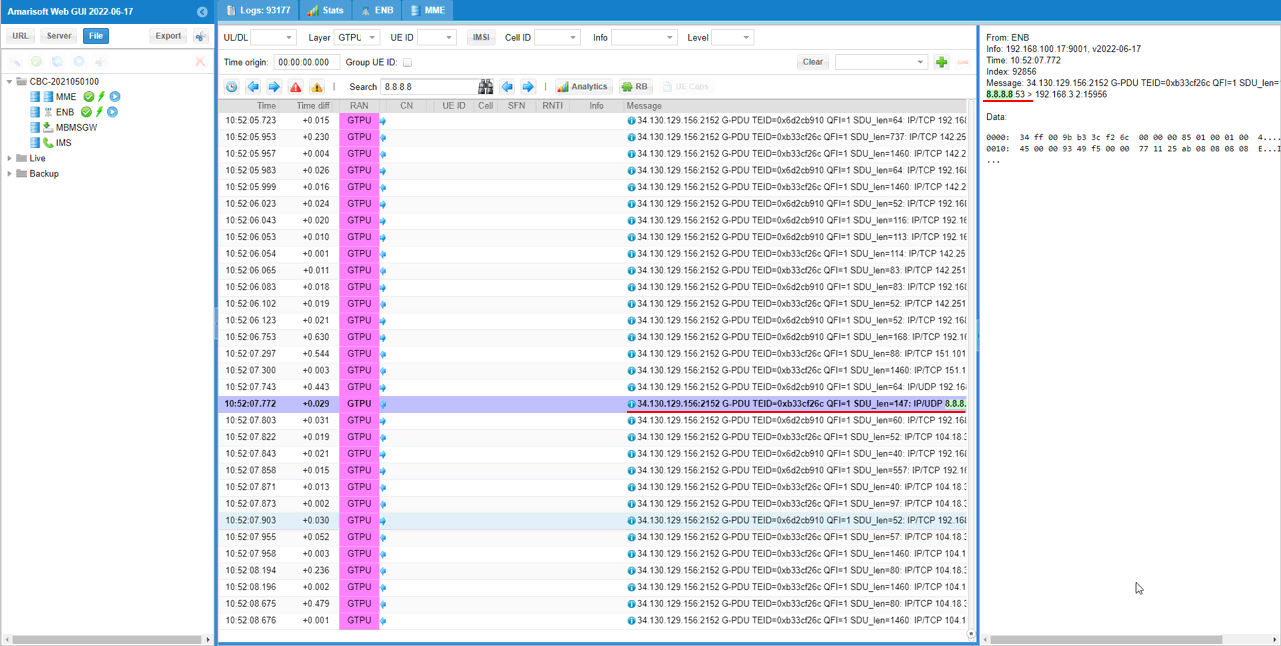

The following is a sample log where the Amari UE sim is used as the DUT and a ping is sent from the UE sim to the Core network on the cloud.

You can see that the gtp_ext_addr value in the configuration file (mme.cfg on the cloud) is set as the transportLayerAddress of the PDU session resource setup request NGAP message. If gtp_ext_addr is not set in the configuration file, gtp_addr is used as the transportLayerAddress. The gtp_ext_addr should be the external (public) IP with which your cloud PC is associated.

In the same way, the gtp_ext_addr in enb.cfg is set as the transportLayerAddress of the PDU session resource setup response NGAP message. If you don't specify gtp_ext_addr in the configuration file, gtp_addr is set as the transportLayerAddress. The gtp_ext_addr should be the public IP address of the network that your callbox is connected to.
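The fallback rule described above can be summarized in a few lines. This is only a model of the behavior as I described it, not Amarisoft's actual implementation. The function name advertised_gtp_address and the sample addresses are hypothetical.

```python
# Sketch of the address selection described above: the address put into
# transportLayerAddress is gtp_ext_addr when it is set, otherwise gtp_addr.
# This models the described behavior only; it is not Amarisoft code.
from typing import Mapping


def advertised_gtp_address(cfg: Mapping[str, str]) -> str:
    """Return the GTP address a node would advertise to its peer."""
    return cfg.get("gtp_ext_addr") or cfg["gtp_addr"]


if __name__ == "__main__":
    # Hypothetical callbox settings: a local address plus the public IP
    # placeholder used in this tutorial.
    without_ext = {"gtp_addr": "192.168.1.10"}
    with_ext = {"gtp_addr": "192.168.1.10", "gtp_ext_addr": "aaa.bbb.ccc.ddd"}

    print(advertised_gtp_address(without_ext))  # local address, unreachable from the cloud
    print(advertised_gtp_address(with_ext))     # public address, reachable through NAT
```

Without gtp_ext_addr, the peer is told to send GTP-U packets to a local address it cannot route to, which is why signaling works but user traffic does not.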

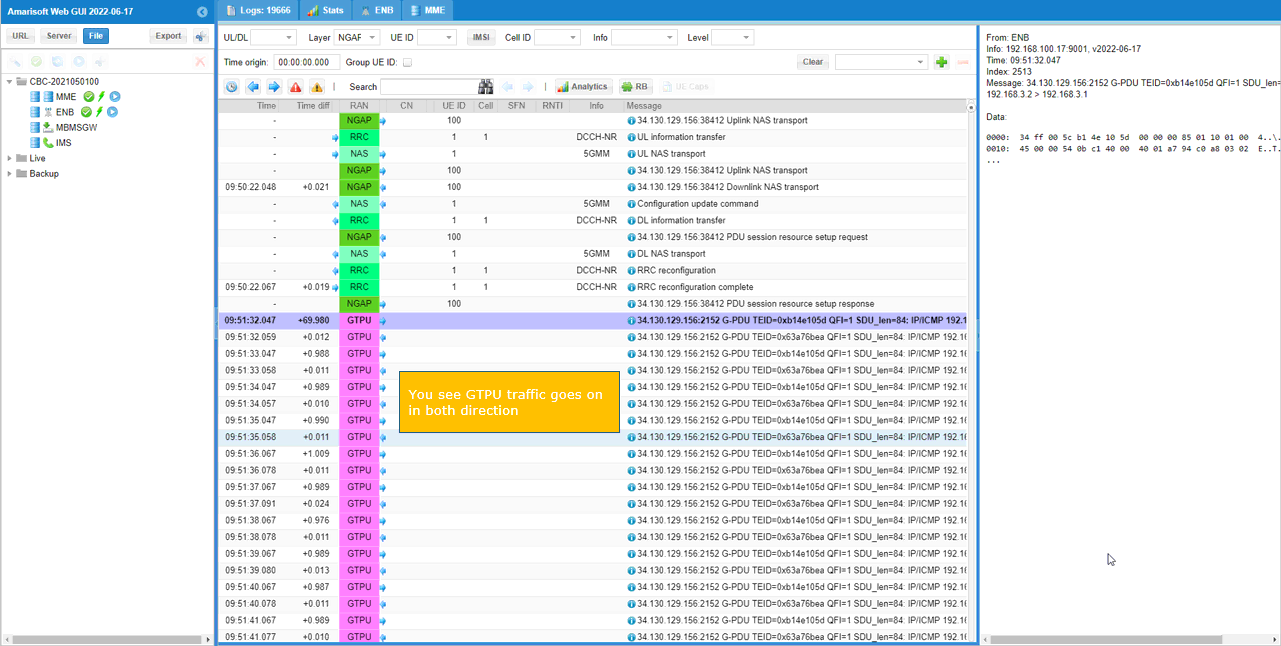

Try some IP traffic (e.g., ping) between the MME and the gNB/eNB, and make sure that the traffic goes through in both directions via GTP-U.
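If you want to record the round-trip time of such a ping test, a small parser for the summary line can help. The sketch below assumes the summary format printed by the Linux iputils ping ("rtt min/avg/max/mdev = ..."). The sample line in it is made up for illustration and is not a measurement from this setup.

```python
# Sketch: extract round-trip statistics from the summary line of Linux ping.
# Assumption: the output ends with the iputils-style line
#   rtt min/avg/max/mdev = <min>/<avg>/<max>/<mdev> ms
import re
from typing import Dict, Optional

_RTT_RE = re.compile(
    r"rtt min/avg/max/mdev = ([\d.]+)/([\d.]+)/([\d.]+)/([\d.]+) ms"
)


def parse_ping_rtt(output: str) -> Optional[Dict[str, float]]:
    """Return min/avg/max/mdev in milliseconds, or None if no summary is found."""
    match = _RTT_RE.search(output)
    if match is None:
        return None
    keys = ("min", "avg", "max", "mdev")
    return {key: float(value) for key, value in zip(keys, match.groups())}


if __name__ == "__main__":
    # Made-up sample line, for illustration only.
    sample = "rtt min/avg/max/mdev = 40.1/42.5/47.9/2.3 ms"
    print(parse_ping_rtt(sample))
```

Comparing the average against the same test on a regular setup (RAN + Core in the same box) gives a rough idea of the delay added by the cloud path.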

The following is a sample log where a commercial UE is used as the DUT and the user traffic is internet traffic (e.g., browsing, YouTube).